Google to “Supercharge” Google Assistant with Large Language Models

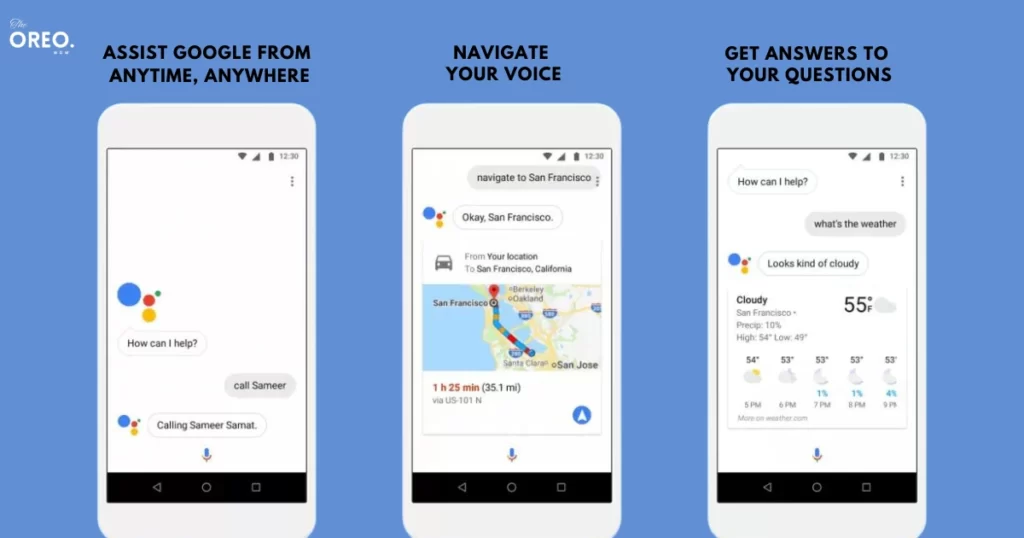

Google is reportedly considering using large language models (LLMs) to “supercharge” its virtual assistant, Google Assistant. LLMs are a type of artificial intelligence model trained on massive datasets of text and code.

This training lets them generate text, translate languages, write different kinds of creative content, and answer questions conversationally.

Google is exploring ways to use LLMs to make Google Assistant more helpful and versatile.

For example, Google Assistant could understand natural language more effectively, allowing it to answer questions more accurately and conversationally. It could also generate creative and informative content, such as poems, stories, and essays.

If the effort succeeds, Google Assistant could become considerably more helpful and versatile than it is today.

Here are some of the potential benefits of a “supercharged” Google Assistant:

- It could answer questions more accurately and more conversationally.

- It could generate more creative and informative content.

- It could translate languages more accurately and fluently.

- It could write code and help solve programming problems.

- It could provide more personalized and helpful assistance, tailored to the individual user’s needs and preferences.

Of course, there are also some potential risks associated with a “supercharged” Google Assistant.

For example, it could be used to spread misinformation or to create deepfakes.

Google would need to mitigate these risks before a “supercharged” Google Assistant could be considered safe and reliable.

Google has already hinted at what’s to come. In an internal email, a senior Google executive said that the company is “exploring what a supercharged Assistant, powered by the latest LLM technology, would look like.” This suggests that Google is already working on integrating Bard, its LLM-powered conversational chatbot, with Google Assistant.

Here are some of the ways that Bard could enhance Google Assistant:

- A better understanding of natural language: Bard’s ability to understand natural language could make Google Assistant much more helpful and conversational. For example, Bard could be used to answer your questions in a more natural way or to follow up on your requests with additional information.

- More creative and informative content: Bard’s ability to generate creative content could make Google Assistant more engaging and informative. For example, Bard could be used to generate personalized stories, poems, or articles.

- Personalized recommendations: Bard’s ability to pick up on your interests from conversation could be used to make personalized recommendations. For example, Bard could be used to recommend music, movies, or books that you might enjoy.

- Handling more tasks: Bard’s ability to understand and follow instructions could let Google Assistant handle a wider range of tasks. For example, Bard could be used to book appointments, make reservations, or control smart home devices.

Application areas for integrating LLMs with graphical user interfaces (GUIs)

- Summarizing on-screen content: This could be used to provide a quick overview of the content on a screen, without having to read through it all. For example, if you’re looking at a product page on a website, you could use an LLM to summarize the key features of the product.

- Answering questions based on on-screen content: This could be used to answer questions about the content on a screen, without having to click through menus or search for information. For example, if you’re looking at a map, you could use an LLM to answer questions about the location of specific landmarks.

- Assigning UI functions to language prompts: This could be used to control the actions of a GUI using natural language. For example, you could use an LLM to open a new tab in a web browser or to play a song on a music player.
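As a rough illustration of how one model could serve all three application areas, the sketch below turns the screen into text and wraps it in a task-specific prompt. It assumes a text-only LLM and a simple list-of-elements screen representation; `UIElement`, `serialize_screen`, `build_prompt`, and the prompt wording are all hypothetical, not part of any Google API, and the step that actually sends the prompt to a model is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UIElement:
    """One on-screen element (hypothetical representation)."""
    element_id: int
    kind: str  # e.g. "text", "button", "link"
    text: str


def serialize_screen(elements: List[UIElement]) -> str:
    """Render the screen as one line per element so a text-only LLM can read it."""
    return "\n".join(f"[{e.element_id}] {e.kind}: {e.text}" for e in elements)


# One illustrative template per application area: summarizing the screen,
# answering a question about it, and mapping an instruction to an element.
TASK_TEMPLATES = {
    "summarize": "Summarize the key points of this screen.",
    "qa": "Answer the question using only this screen: {question}",
    "command": "Reply with the id of the element to tap for: {instruction}",
}


def build_prompt(task: str, elements: List[UIElement], **fields: str) -> str:
    """Combine the serialized screen with the task instruction.

    The returned string would be sent to an LLM; that call is out of scope here.
    """
    task_text = TASK_TEMPLATES[task].format(**fields)
    return f"Screen:\n{serialize_screen(elements)}\n\nTask: {task_text}"


if __name__ == "__main__":
    screen = [
        UIElement(0, "text", "Wireless headphones, 30-hour battery"),
        UIElement(1, "button", "Add to cart"),
    ]
    print(build_prompt("qa", screen, question="How long does the battery last?"))
```

Keeping the screen serialization separate from the task templates means the same screen text can be reused for summarizing, answering questions, or choosing an element to act on.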

These are just a few of the possibilities for integrating LLMs with GUIs. As this technology continues to develop, we can expect to see even more innovative and creative ways to use it.

Mapping instruction to UI action

The “mapping instruction to UI action” task is arguably the most promising application area for integrating LLMs with GUIs. It could revolutionize the way we interact with our devices, making that interaction much more natural and efficient.

For example, imagine being able to control your phone using natural language prompts. You could say something like, “Open the Spotify app” or “Set the cellular network mode to 4G”. The virtual assistant would then take care of the rest, opening the app or changing the settings for you.

This would be a huge leap forward in the way we interact with our devices. It would be more natural and efficient than clicking through menus or typing commands, and more convenient, because you wouldn’t have to take your hands off whatever you’re doing to use your phone.
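The step where the assistant “takes care of the rest” can be sketched as turning the model’s reply into a structured action and checking it before anything runs on the device. This minimal sketch assumes the model has been asked to reply in JSON; the action names, `UIAction`, `parse_action`, and `execute` are hypothetical, and `execute` only describes the action instead of calling real device APIs.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical action vocabulary; a real assistant would support far more.
ALLOWED_ACTIONS = {"open_app", "set_setting", "tap"}


@dataclass(frozen=True)
class UIAction:
    action: str
    target: str
    value: Optional[str] = None


def parse_action(reply: str) -> UIAction:
    """Convert the model's JSON reply into a validated UIAction.

    Raises ValueError for anything outside the allowed vocabulary, so a
    malformed or unexpected reply never reaches the device.
    """
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    if not isinstance(data.get("target"), str):
        raise ValueError("reply is missing a string 'target'")
    return UIAction(action, data["target"], data.get("value"))


def execute(action: UIAction) -> str:
    """Stand-in for real device calls: only describes what would happen."""
    if action.action == "open_app":
        return f"Opening {action.target}"
    if action.action == "set_setting":
        return f"Setting {action.target} to {action.value}"
    return f"Tapping {action.target}"


if __name__ == "__main__":
    # Replies a model might return for the two spoken commands above.
    for reply in (
        '{"action": "open_app", "target": "Spotify"}',
        '{"action": "set_setting", "target": "cellular network mode", "value": "4G"}',
    ):
        print(execute(parse_action(reply)))
```

Restricting replies to a fixed action vocabulary is one simple way to keep a malformed or unexpected model output from triggering an arbitrary action on the phone.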

It will be exciting to see how this technology develops. It has the potential to make our interactions with our devices much more natural, efficient, and convenient.

Overall, the future of virtual assistants looks bright. With the development of LLMs and other AI technologies, virtual assistants are becoming more powerful and versatile, and it will be interesting to see how they change the way we interact with our devices.