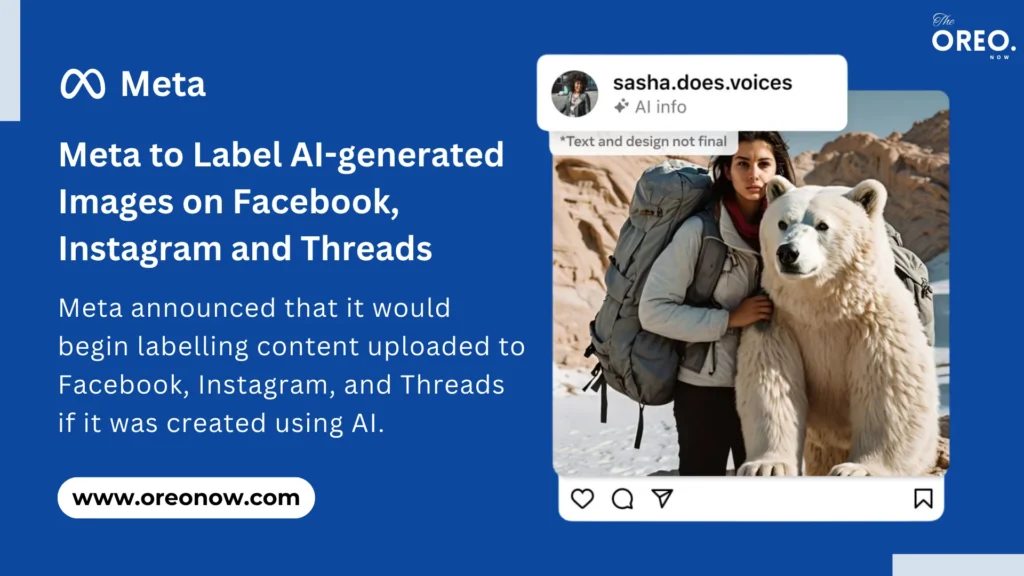

Meta Labelling AI-generated Images on Facebook, Instagram and Threads

Meta recently announced that it will label AI-generated images on its platforms, including Facebook, Instagram, and Threads. The aim is to prevent potential misuse of the technology and to promote transparency.

With its announcement of plans to label AI-generated images on Facebook, Instagram, and Threads, the social media giant took a big step toward openness and responsible use of this quickly developing technology. As AI image tools become better at producing photorealistic images, the line between real and fabricated blurs. This can spread false information, manipulate audiences, and erode online trust.

Why Is Meta Labelling AI-generated Images?

Imagine scrolling through your Instagram feed, captivated by a breathtaking landscape unlike any you’ve seen before. Lush colours paint the scene, impossible rock formations pierce the sky, and yet, something feels subtly off. Is it a photographer’s masterpiece or a figment of artificial intelligence? Thanks to Meta’s latest initiative, you will be able to know for sure.

Meta’s solution is a simple yet impactful label: “Imagined with AI.” This tag will appear on any image identified as AI-generated, regardless of the tool used. Whether it’s a dreamy scene created by Meta’s own AI or a fantastical creature created by a tool like OpenAI’s DALL-E 2, users will be informed of the image’s artificial origin.

Was This Necessary?

Transparency gives users power. Knowing that an image was created by artificial intelligence (AI) lets them make informed decisions. Users can assess the content critically, recognize its limitations, and refrain from spreading false information. Labelling also helps to build trust. By disclosing that AI-generated material exists, Meta shows that it is committed to combating false information and maintaining the integrity of the platform.

Challenges Meta Will Face in Labelling AI-generated Images

Labelling AI-generated images is challenging. Identifying them requires strong detection systems, particularly when the images come from a variety of tools. Although Meta can already do this for images created with its own AI, recognizing content from external tools is still a work in progress. For AI-generated audio and video, Meta currently relies on the people sharing the content to disclose it themselves.

This project is an important step toward handling AI-generated content responsibly. By empowering users and being open about where images come from, Meta sets an example for other platforms to follow. As technology continues to transform our online experience, it is essential that people engage with AI responsibly and knowledgeably. The label “Imagined with AI” isn’t just a disclaimer; it’s a bridge towards a more transparent and trustworthy digital landscape.
